k8s High-Availability Cluster Installation Tutorial

Author: qq_42799562

This article walks through installing a highly available k8s cluster, using worked examples and screenshots.

I. Install the load balancer

k8s load balancer: see the official guide.

1. Prepare three machines

| Node name | IP |
|---|---|
| master-1 | 192.168.1.11 |
| master-2 | 192.168.1.12 |
| master-3 | 192.168.1.13 |

2. Install haproxy and keepalived on each of the three machines as the load balancer

# Install haproxy
sudo dnf install haproxy -y
# Install Keepalived
sudo yum install epel-release -y
sudo yum install keepalived -y
# Confirm that both packages are installed
sudo dnf info haproxy
sudo dnf info keepalived

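3. k8s load balancer configuration files

Follow the official guide and substitute your own machine IPs and ports as needed. 192.168.1.9 is the virtual IP (VIP) handed to keepalived; any IP that is not already in use will do. On the other two nodes, change state MASTER to BACKUP and priority 101 to 100, so that the MASTER node keeps the higher priority.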
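Since the BACKUP nodes differ from the MASTER only in those two lines, the BACKUP file can be derived from the MASTER one instead of being edited by hand. A minimal sketch, assuming the file uses exactly the `state MASTER` and `priority 101` values from section 3.1; the helper name `to_backup_conf` is hypothetical.

```shell
# Hypothetical helper: read the MASTER keepalived.conf on stdin and
# print the BACKUP variant (state swapped, priority lowered to 100).
to_backup_conf() {
    sed -e 's/state MASTER/state BACKUP/' \
        -e 's/priority 101/priority 100/'
}

# Example (run on master-1, then copy the result to master-2 and master-3):
#   to_backup_conf < /etc/keepalived/keepalived.conf > keepalived.backup.conf
```

All other settings (interface, virtual_router_id, auth_pass, virtual IP) stay identical on the three nodes.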

3.1 /etc/keepalived/keepalived.conf

! /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script check_apiserver {
  script "/etc/keepalived/check_apiserver.sh"
  interval 3
  weight -2
  fall 10
  rise 2
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 101
    authentication {
        auth_type PASS
        auth_pass 42
    }
    virtual_ipaddress {
        192.168.1.9
    }
    track_script {
        check_apiserver
    }
}

3.2 /etc/keepalived/check_apiserver.sh

#!/bin/sh

errorExit() {
    echo "*** $*" 1>&2
    exit 1
}

curl -sfk --max-time 2 https://localhost:6553/healthz -o /dev/null || errorExit "Error GET https://localhost:6553/healthz"

3.3 Make the script executable

chmod +x /etc/keepalived/check_apiserver.sh
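The check script ties into the priorities in keepalived.conf. With `weight -2`, a node whose check keeps failing (10 consecutive failures at a 3-second interval, per `fall 10` and `interval 3`) has its priority lowered by 2. That drops the MASTER from 101 to 99, below the 100 of the BACKUP nodes, so the VIP moves. The sketch below only reproduces that arithmetic for negative weights; the function name is hypothetical.

```shell
# effective_priority BASE WEIGHT STATUS
# STATUS is "ok" or "fail". A negative WEIGHT is applied only while the
# tracked script is considered failed (this sketch ignores positive weights).
effective_priority() {
    base=$1; weight=$2; status=$3
    if [ "$status" = "fail" ] && [ "$weight" -lt 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

effective_priority 101 -2 ok     # healthy MASTER
effective_priority 101 -2 fail   # MASTER whose apiserver check keeps failing
effective_priority 100 -2 ok     # healthy BACKUP
```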

3.4 /etc/haproxy/haproxy.cfg

# /etc/haproxy/haproxy.cfg
#---------------------------------------------------------------------
# Global settings
#---------------------------------------------------------------------
global
    log stdout format raw local0
    daemon

#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 1
    timeout http-request 10s
    timeout queue 20s
    timeout connect 5s
    timeout client 35s
    timeout server 35s
    timeout http-keep-alive 10s
    timeout check 10s

#---------------------------------------------------------------------
# apiserver frontend which proxies to the control plane nodes
#---------------------------------------------------------------------
frontend apiserver
    bind *:6553
    mode tcp
    option tcplog
    default_backend apiserverbackend

#---------------------------------------------------------------------
# round robin balancing for apiserver
#---------------------------------------------------------------------
backend apiserverbackend
    option httpchk
    http-check connect ssl
    http-check send meth GET uri /healthz
    http-check expect status 200
    mode tcp
    balance roundrobin
    server master-1 192.168.1.11:6443 check verify none
    server master-2 192.168.1.12:6443 check verify none
    server master-3 192.168.1.13:6443 check verify none
    # [...]

3.5 Check haproxy.cfg for syntax errors, then restart

haproxy -c -f /etc/haproxy/haproxy.cfg
systemctl restart haproxy
systemctl restart keepalived
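After the restart, exactly one node should hold the virtual IP. A small sketch for checking that, assuming the `ens33` interface and the 192.168.1.9 VIP from keepalived.conf and assuming the iproute2 `ip` tool is available; `has_vip` is a hypothetical helper that only parses the text it is given.

```shell
# has_vip VIP: succeed if the `ip -o -4 addr` output on stdin lists VIP.
# The trailing "/" stops 192.168.1.9 from matching 192.168.1.90.
has_vip() {
    grep -qF "inet $1/"
}

# Example on each load-balancer node:
#   ip -o -4 addr show dev ens33 | has_vip 192.168.1.9 && echo "this node holds the VIP"
```

Stopping keepalived on the node that holds the VIP and re-running the check on the others is a simple way to confirm that failover works.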

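II. Install the k8s cluster

For the base configuration, follow the steps in my previous article on the single-master setup.

1. Stacked etcd topology

Just run the initialization directly.

Pros: simple to operate, and fewer nodes are required.

Cons: a stacked cluster runs the risk of coupled failure. If one node fails, both an etcd member and a control-plane instance are lost, and redundancy is compromised.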

kubeadm init --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
  --apiserver-advertise-address=192.168.1.11 \
  --control-plane-endpoint 192.168.1.9:6553 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr 10.96.0.0/12 \
  --kubernetes-version=v1.23.8 \
  --upload-certs \
  --v=6
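The pod range and the service range passed to kubeadm should not overlap. Note that a /12 written as 10.244.0.0/12 actually covers 10.240.0.0 to 10.255.255.255 and therefore contains the pod range 10.244.0.0/16, whereas kubeadm's default service range 10.96.0.0/12 does not. A rough sketch for checking a pair of ranges before running init; all three helper names are hypothetical, and it assumes plain dotted IPv4 without leading zeros.

```shell
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_bounds ADDR/PREFIX: print "first last" of the range as integers.
cidr_bounds() {
    addr=${1%/*}; prefix=${1#*/}
    ip=$(ip_to_int "$addr")
    size=$(( 1 << (32 - prefix) ))
    first=$(( ip / size * size ))   # round down to the network address
    echo "$first $(( first + size - 1 ))"
}

# cidrs_overlap CIDR1 CIDR2: succeed if the two ranges share any address.
cidrs_overlap() {
    set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Example:
#   cidrs_overlap 10.244.0.0/16 10.96.0.0/12 && echo overlap || echo disjoint
```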

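2. External etcd topology

Pros: this topology decouples the control plane from the etcd members. It therefore provides an HA setup in which losing a control-plane instance or an etcd member has less impact, and cluster redundancy is not affected the way it is in the stacked HA topology.

Cons: this topology needs twice as many hosts as the stacked HA topology. An HA cluster with this topology needs at least three hosts for control-plane nodes and three hosts for etcd nodes. See the official guide.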
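The reason for three etcd hosts is quorum: etcd stays available only while a majority of its members is up, so three is the smallest cluster size that survives the loss of one member. The arithmetic, as a small sketch with hypothetical function names:

```shell
# quorum N: members that must be up for an N-member etcd cluster to work.
quorum() { echo $(( $1 / 2 + 1 )); }

# fault_tolerance N: members that can fail while quorum is still met.
fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 1 2 3 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

Two members tolerate no failures at all, which is why an even-sized cluster gains nothing over the next smaller odd size.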

2.1 Prepare three machines

| Node name | IP |
|---|---|
| etcd-1 | 192.168.1.3 |
| etcd-2 | 192.168.1.4 |
| etcd-3 | 192.168.1.5 |

2.2 On every etcd node, create the configuration file /etc/systemd/system/kubelet.service.d/20-etcd-service-manager.conf

[Service]
ExecStart=
# Replace "systemd" below with the cgroup driver used by your container runtime.
# The kubelet default is "cgroupfs".
# If needed, replace the value of "--container-runtime-endpoint" with a different container runtime.
ExecStart=/usr/bin/kubelet --address=127.0.0.1 --pod-manifest-path=/etc/kubernetes/manifests --cgroup-driver=systemd
Restart=always

2.3 Start kubelet

systemctl daemon-reload
systemctl restart kubelet
# Check the kubelet status; it should normally become running
systemctl status kubelet
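kubelet may need a few seconds before it reports running, so a script that automates this step probably wants to poll instead of checking once. A minimal sketch of a generic retry helper (the name `retry` and its argument order are my own choice, not part of systemd or kubeadm):

```shell
# retry ATTEMPTS DELAY_SECONDS COMMAND...
# Run COMMAND until it succeeds, at most ATTEMPTS times, sleeping
# DELAY_SECONDS between tries. Returns non-zero if every attempt fails.
retry() {
    attempts=$1; delay=$2; shift 2
    i=1
    while :; do
        "$@" && return 0
        [ "$i" -ge "$attempts" ] && return 1
        i=$((i + 1))
        sleep "$delay"
    done
}

# Example: wait up to about 30 seconds for kubelet to become active.
#   retry 10 3 systemctl is-active --quiet kubelet || echo "kubelet did not start"
```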

2.4 Run the following script, replacing the IPs and hostnames with your own

# Replace the IP addresses of HOST0, HOST1 and HOST2 with your host IPs, then run the following on etcd-1:
export HOST0=192.168.1.3
export HOST1=192.168.1.4
export HOST2=192.168.1.5

# Update NAME0, NAME1 and NAME2 with your hostnames
export NAME0="etcd-1"
export NAME1="etcd-2"
export NAME2="etcd-3"

# Create temporary directories to hold the files that will be distributed to the other hosts
mkdir -p /tmp/${HOST0}/ /tmp/${HOST1}/ /tmp/${HOST2}/

HOSTS=(${HOST0} ${HOST1} ${HOST2})
NAMES=(${NAME0} ${NAME1} ${NAME2})

for i in "${!HOSTS[@]}"; do
HOST=${HOSTS[$i]}
NAME=${NAMES[$i]}
cat << EOF > /tmp/${HOST}/kubeadmcfg.yaml
---
apiVersion: "kubeadm.k8s.io/v1beta3"
kind: InitConfiguration
nodeRegistration:
    name: ${NAME}
localAPIEndpoint:
    advertiseAddress: ${HOST}
---
apiVersion: "kubeadm.k8s.io/v1beta3"
kind: ClusterConfiguration
etcd:
    local:
        serverCertSANs:
        - "${HOST}"
        peerCertSANs:
        - "${HOST}"
        extraArgs:
            initial-cluster: ${NAMES[0]}=https://${HOSTS[0]}:2380,${NAMES[1]}=https://${HOSTS[1]}:2380,${NAMES[2]}=https://${HOSTS[2]}:2380
            initial-cluster-state: new
            name: ${NAME}
            listen-peer-urls: https://${HOST}:2380
            listen-client-urls: https://${HOST}:2379
            advertise-client-urls: https://${HOST}:2379
            initial-advertise-peer-urls: https://${HOST}:2380
EOF
done

2.5 Generate the certificate authority on any etcd node

kubeadm init phase certs etcd-ca
# This creates the following two files:
# /etc/kubernetes/pki/etcd/ca.crt
# /etc/kubernetes/pki/etcd/ca.key

2.6 为每个成员创建证书

kubeadm init phase certs etcd-server --config=/tmp/${HOST2}/kubeadmcfg.yaml

kubeadm init phase certs etcd-peer --config=/tmp/${HOST2}/kubeadmcfg.yaml

kubeadm init phase certs etcd-healthcheck-client --config=/tmp/${HOST2}/kubeadmcfg.yaml

kubeadm init phase certs apiserver-etcd-client --config=/tmp/${HOST2}/kubeadmcfg.yaml

cp -R /etc/kubernetes/pki /tmp/${HOST2}/

# 清理不可重复使用的证书

find /etc/kubernetes/pki -not -name ca.crt -not -name ca.key -type f -delete

kubeadm init phase certs etcd-server --config=/tmp/${HOST1}/kubeadmcfg.yaml

kubeadm init phase certs etcd-peer --config=/tmp/${HOST1}/kubeadmcfg.yaml

kubeadm init phase certs etcd-healthcheck-client --config=/tmp/${HOST1}/kubeadmcfg.yaml

kubeadm init phase certs apiserver-etcd-client --config=/tmp/${HOST1}/kubeadmcfg.yaml

cp -R /etc/kubernetes/pki /tmp/${HOST1}/

find /etc/kubernetes/pki -not -name ca.crt -not -name ca.key -type f -delete

kubeadm init phase certs etcd-server --config=/tmp/${HOST0}/kubeadmcfg.yaml

kubeadm init phase certs etcd-peer --config=/tmp/${HOST0}/kubeadmcfg.yaml

kubeadm init phase certs etcd-healthcheck-client --config=/tmp/${HOST0}/kubeadmcfg.yaml

kubeadm init phase certs apiserver-etcd-client --config=/tmp/${HOST0}/kubeadmcfg.yaml

# 不需要移动 certs 因为它们是给 HOST0 使用的

# 清理不应从此主机复制的证书

find /tmp/${HOST2} -name ca.key -type f -delete

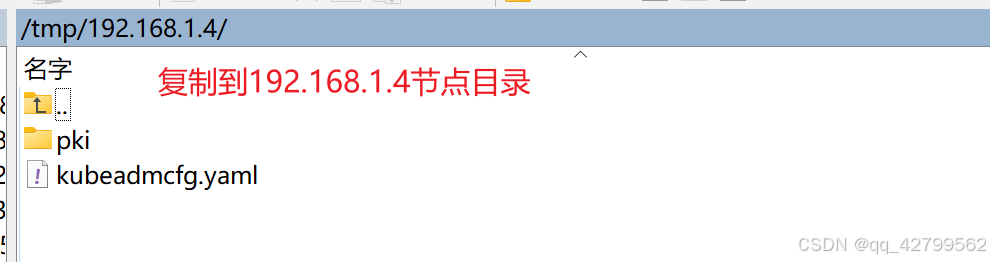

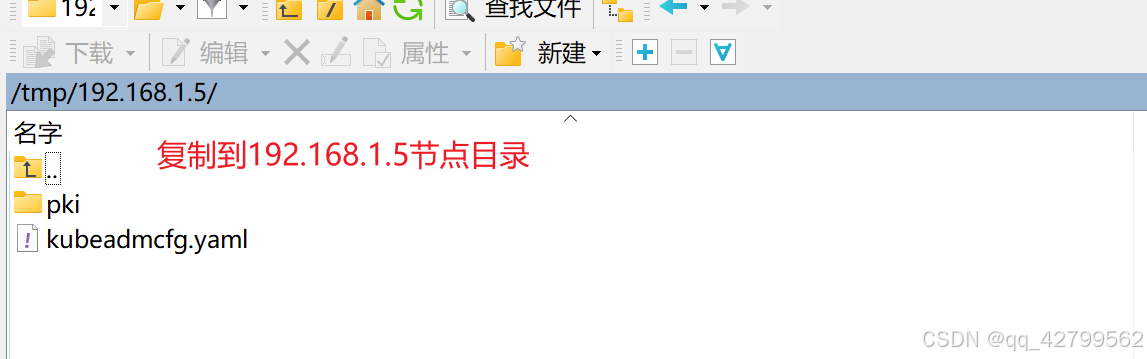

find /tmp/${HOST1} -name ca.key -type f -delete2.7 证书已生成,现在必须将它们移动到对应的主机。复制tmp下各自节点证书目录pki至/etc/kubernetes/
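One way to do the copy is scp. The sketch below only prints the commands so they can be reviewed first; it assumes root SSH access to the etcd hosts, which the article does not state, and `print_copy_cmd` is a hypothetical helper.

```shell
# print_copy_cmd HOST: print the scp command that would ship the pki
# directory generated for HOST (under /tmp/HOST) to /etc/kubernetes/ there.
# Assumption: SSH login as root is possible on HOST.
print_copy_cmd() {
    echo "scp -r /tmp/$1/pki root@$1:/etc/kubernetes/"
}

# HOST0 (192.168.1.3) already has its certificates in place.
for h in 192.168.1.4 192.168.1.5; do
    print_copy_cmd "$h"
done
```

Step 2.8 reads /tmp/<node IP>/kubeadmcfg.yaml on each node, so that file presumably needs to be copied to the same path on etcd-2 and etcd-3 as well.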

2.8 Run the following on each etcd node, using the kubeadm line that matches that node and substituting your own etcd node IPs

# Prepare the images
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 k8s.gcr.io/pause:3.6
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0 k8s.gcr.io/etcd:3.5.1-0
sudo systemctl daemon-reload
sudo systemctl restart kubelet
kubeadm init phase etcd local --config=/tmp/192.168.1.3/kubeadmcfg.yaml
#kubeadm init phase etcd local --config=/tmp/192.168.1.4/kubeadmcfg.yaml
#kubeadm init phase etcd local --config=/tmp/192.168.1.5/kubeadmcfg.yaml

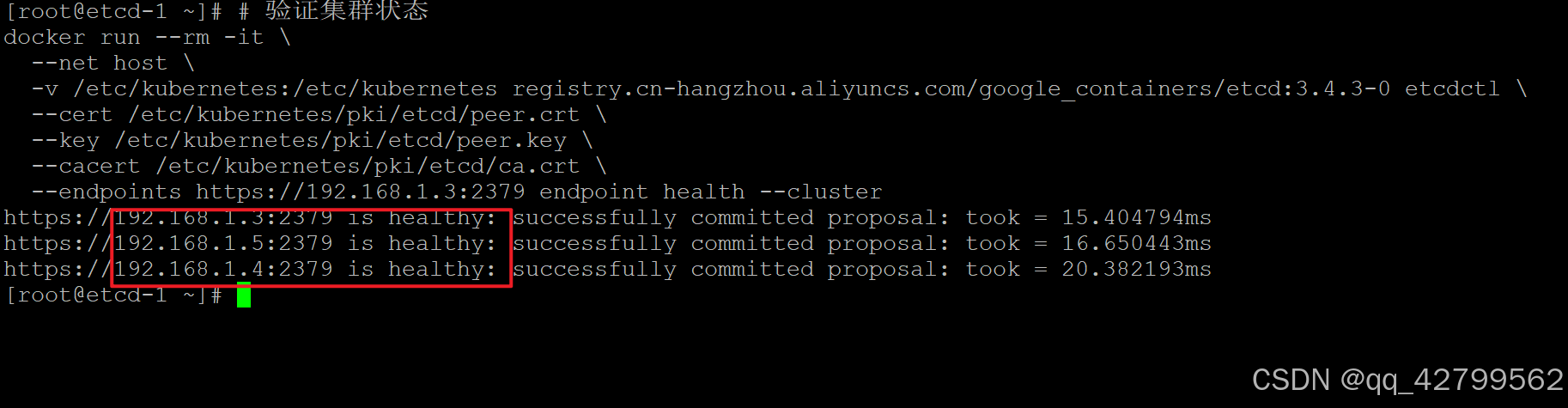

2.9 Verify the etcd cluster

# Verify the cluster state
docker run --rm -it \
  --net host \
  -v /etc/kubernetes:/etc/kubernetes registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0 etcdctl \
  --cert /etc/kubernetes/pki/etcd/peer.crt \
  --key /etc/kubernetes/pki/etcd/peer.key \
  --cacert /etc/kubernetes/pki/etcd/ca.crt \
  --endpoints https://192.168.1.3:2379 endpoint health --cluster
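With all three members up, the command should report each endpoint as healthy. When scripting the check, the output can be counted rather than read by eye. This sketch assumes etcdctl prints one line per endpoint containing the words "is healthy", which is how I recall its output but is not shown in the article; `count_healthy` is a hypothetical helper.

```shell
# count_healthy: read `etcdctl endpoint health --cluster` output on stdin
# and print the number of endpoints reported as healthy.
count_healthy() {
    # grep -c exits non-zero when the count is 0; keep the function's status clean.
    grep -c 'is healthy' || true
}

# Example (save the output of the docker command above, without -it, to health.txt):
#   [ "$(count_healthy < health.txt)" -eq 3 ] && echo "all etcd members healthy"
```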

3、 配置完etcd集群,就在第一个节点配置k8s集群启动文件 config kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3

kind: InitConfiguration

imageRepository: "registry.cn-hangzhou.aliyuncs.com/google_containers"

localAPIEndpoint:

advertiseAddress: 192.168.1.11

uploadCerts: true

---

apiVersion: kubeadm.k8s.io/v1beta3

kind: ClusterConfiguration

imageRepository: "registry.cn-hangzhou.aliyuncs.com/google_containers"

kubernetesVersion: v1.23.8

controlPlaneEndpoint: "192.168.1.9:6553"

networking:

podSubnet: "10.244.0.0/16"

serviceSubnet: "10.244.0.0/12"

etcd:

external:

endpoints:

- https://192.168.1.3:2379

- https://192.168.1.4:2379

- https://192.168.1.5:2379

caFile: /etc/kubernetes/pki/etcd/ca.crt

certFile: /etc/kubernetes/pki/apiserver-etcd-client.crt

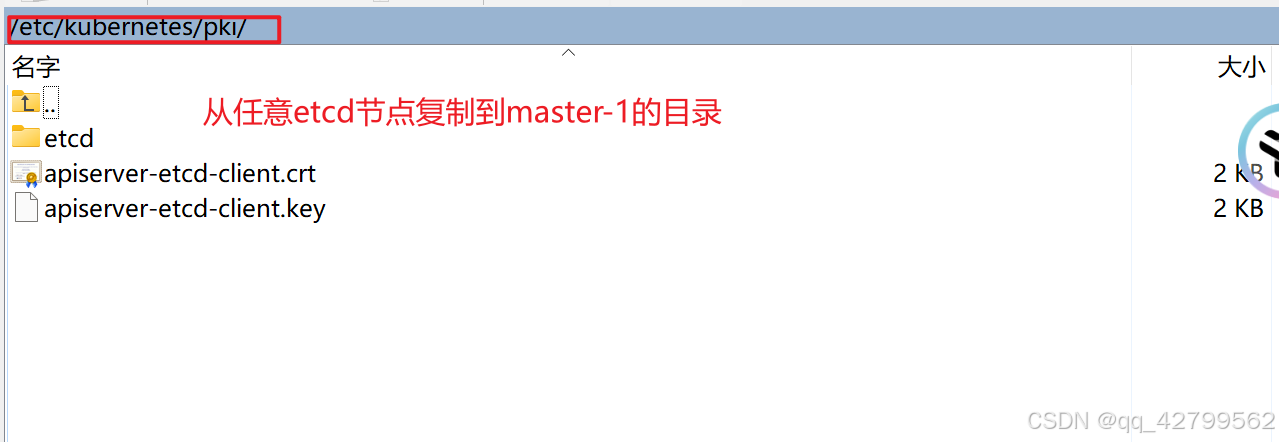

keyFile: /etc/kubernetes/pki/apiserver-etcd-client.key4、从任意etcd节点,复制/etc/kubernetes/pki目录文件到初始化集群的k8s节点
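kubeadm init will fail if the files named under etcd.external are not present, so it can help to check for them before starting. A minimal sketch, with a hypothetical helper name, that looks for exactly the three paths referenced by caFile, certFile and keyFile:

```shell
# missing_etcd_files PKI_DIR: print every file referenced by the
# etcd.external section that is absent under PKI_DIR.
# Returns non-zero if at least one file is missing.
missing_etcd_files() {
    pki=$1; rc=0
    for f in etcd/ca.crt apiserver-etcd-client.crt apiserver-etcd-client.key; do
        if [ ! -f "$pki/$f" ]; then
            echo "$pki/$f"
            rc=1
        fi
    done
    return $rc
}

# Example on the first control-plane node:
#   missing_etcd_files /etc/kubernetes/pki || echo "copy the files listed above first"
```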

kubeadm init --config kubeadm-config.yaml --upload-certs --v=6
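When init succeeds it prints the join commands for the remaining nodes. The bootstrap token is valid for 24 hours by default and the uploaded certificate key for 2 hours, so nodes added later need fresh values. A sketch of how the control-plane join command could be rebuilt; `control_plane_join` is a hypothetical helper, and taking the key from the last line of the upload-certs output is an assumption about that output's layout.

```shell
# control_plane_join JOIN_CMD CERT_KEY
# Append the control-plane flags to a worker join command.
control_plane_join() {
    echo "$1 --control-plane --certificate-key $2"
}

# Example on an existing control-plane node:
#   join_cmd=$(kubeadm token create --print-join-command)
#   # With external etcd, pass the same config file that was used for init.
#   cert_key=$(kubeadm init phase upload-certs --upload-certs --config kubeadm-config.yaml | tail -n 1)
#   control_plane_join "$join_cmd" "$cert_key"
```

The plain output of `kubeadm token create --print-join-command` is already the worker join command.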

# Join the other control-plane (master) nodes
kubeadm join 192.168.1.9:6553 --token a26srm.c7sssutz83mz94lq \
  --discovery-token-ca-cert-hash sha256:560139f5ea4b8d3a279de53d9d5d503d41c29394c3ba46a4f312f361708b8b71 \
  --control-plane --certificate-key b6e4df72059c9893d2be4d0e5b7fa2e7c466e0400fe39bd244d0fbf7f3e9c04c

# Join the worker nodes
kubeadm join 192.168.1.9:6553 --token a26srm.c7sssutz83mz94lq \
  --discovery-token-ca-cert-hash sha256:560139f5ea4b8d3a279de53d9d5d503d41c29394c3ba46a4f312f361708b8b71

Install the flannel network plugin (save the manifest below as kube-flannel.yml)

apiVersion: v1
kind: Namespace
metadata:
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
  name: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "EnableNFTables": false,
      "Backend": {
        "Type": "vxlan"
      }
    }
kind: ConfigMap
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-cfg
  namespace: kube-flannel
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: flannel
    k8s-app: flannel
    tier: node
  name: kube-flannel-ds
  namespace: kube-flannel
spec:
  selector:
    matchLabels:
      app: flannel
      k8s-app: flannel
  template:
    metadata:
      labels:
        app: flannel
        k8s-app: flannel
        tier: node
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      containers:
      - args:
        - --ip-masq
        - --kube-subnet-mgr
        command:
        - /opt/bin/flanneld
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        image: registry.cn-hangzhou.aliyuncs.com/1668334351/flannel:v0.26.4
        name: kube-flannel
        resources:
          requests:
            cpu: 100m
            memory: 50Mi
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
          privileged: false
        volumeMounts:
        - mountPath: /run/flannel
          name: run
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
        - mountPath: /run/xtables.lock
          name: xtables-lock
      hostNetwork: true
      initContainers:
      - args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        command:
        - cp
        image: registry.cn-hangzhou.aliyuncs.com/1668334351/flannel-cni-plugin:v1.6.2
        name: install-cni-plugin
        volumeMounts:
        - mountPath: /opt/cni/bin
          name: cni-plugin
      - args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        command:
        - cp
        image: registry.cn-hangzhou.aliyuncs.com/1668334351/flannel:v0.26.4
        name: install-cni
        volumeMounts:
        - mountPath: /etc/cni/net.d
          name: cni
        - mountPath: /etc/kube-flannel/
          name: flannel-cfg
      priorityClassName: system-node-critical
      serviceAccountName: flannel
      tolerations:
      - effect: NoSchedule
        operator: Exists
      volumes:
      - hostPath:
          path: /run/flannel
        name: run
      - hostPath:
          path: /opt/cni/bin
        name: cni-plugin
      - hostPath:
          path: /etc/cni/net.d
        name: cni
      - configMap:
          name: kube-flannel-cfg
        name: flannel-cfg
      - hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
        name: xtables-lock

kubectl apply -f kube-flannel.yml
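Nodes normally stay NotReady until a network plugin is running, so once flannel is applied every node should move to Ready. A small sketch for checking that from a script; `not_ready_count` is a hypothetical helper that only parses the text it receives, and it relies on STATUS being the second column of `kubectl get nodes` output.

```shell
# not_ready_count: read `kubectl get nodes --no-headers` output on stdin and
# print how many nodes have a STATUS other than exactly "Ready".
not_ready_count() {
    awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Example:
#   kubectl get nodes --no-headers | not_ready_count
#   kubectl -n kube-flannel get pods -o wide
```

Note that "Network" in net-conf.json (10.244.0.0/16) has to match the pod CIDR given to kubeadm init.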
